Everyone has heard about Jean Piaget’s (1896-1980) theory of the cognitive development of children. But no one knows that his theory placed Europeans at the top of the cognitive ladder with most humans stuck at the bottom — unless Europeans taught them how to think.

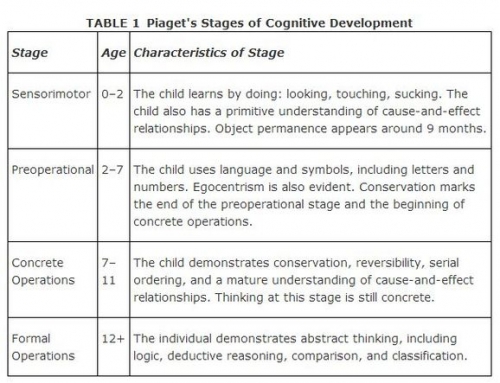

Piaget is widely recognized as the “greatest child psychologist of the twentieth century.” Unlike many other influential figures, Piaget’s discoveries have withstood the test of time. His argument that human cognition develops stage by stage, from sensorimotor, through preoperational and concrete operations, to formal operations, is generally endorsed in psychology and sociology texts as a “remarkably fruitful” model. This is not to deny that aspects of his theory have been revised and supplemented by new insights. One important criticism is that his fixed sequence of clear-cut stages does not always apprehend the overlapping and uneven process in the development of cognition. But even the strongest critics admit that his observations accurately show that substantial differences do exist between the cognitive processes (linguistic development, mental representations of concrete objects, logical reasoning) of children and adults.

Suppression of Piaget’s Cross-Cultural Findings

What the general public does not know, and what the mainstream academic world is suppressing, is that many years of cross-cultural empirical research by Piaget and his followers have demonstrated that the stages of mental development of children and adolescents reflect the stages of cognitive evolution “humankind” has gone through from primitive, ancient, and medieval, to modern societies. The cognitive processes of humanity have not always been the same, but have improved over time. The civilizations of the world can be ranked according to the level of cognitive development of their populations. The peoples of the world differ not only in the content of their values, religious beliefs, and ways of classifying things; they differ in the cognitive processes they employ, their capacity to understand, for example, the relation between objects and concepts, their awareness of objective time, their ability to draw inferences from data, and to project these inferences into the hypothetical realm of the future. Most humans throughout history have been “childlike” in their cognitive capacities; they are not able, for example, to recognize contradictions between belief and experience, or to conceive multiple causes for individual events. Europe began to produce adolescents capable of reaching the stage of formal operational reasoning before any other continent, whereas to this day some nations barely manage to produce adults capable of formal operations.

This aspect of the cross-cultural comparative research conducted by Piaget and his associates has been suppressed. Critics interpreted the lack of formal reasoning among adolescents in many non-Western societies as evidence that his model lacked universal application, rather than as further confirmation that his theory of child development, first developed through extensive research on children in the West, could be applied outside the West. Because many critics erroneously assumed that Piaget’s theory was about how all children naturally mature into higher levels of cognition, they took this lack of cognitive development in pre-modern cultures as a demonstration that different cultural contexts produce different modes of cognitive development. Piaget’s stages, however, should not be seen as stages that every child goes through as they get older. They are not biologically predetermined maturational stages. While there is a teleological tendency in Piaget’s account of cognitive stages, with each of the four stages in a modern environment unfolding naturally as the child ages, this criticism ignored the implications of his cross-cultural studies, which were carried out in his later years, and which made it evident that the ability to reach the stage of formal operations depended on the type of science education children received rather than on a predetermined maturation process.

It can be argued, actually, that Piagetian cross-cultural studies made his theory all the more powerful in offering a precise and orderly account of the cognitive psychological development of humankind in world history from hunting and gathering societies through agrarian societies to modern societies. This was not just a theory about children but a grand theory covering the cognitive experience of all peoples throughout history, from primitive peoples with a preoperational mind, to agrarian peoples with a concrete operational mind, to modern peoples with a formal operational mind. One of the rare followers of this cross-cultural research, the German sociologist Georg W. Oesterdiekhoff, observes that “thousands of empirical studies across all continents and social milieus, from the 1930s to the present” (2015, 85) have been conducted demonstrating that, depending on the level of cultural scientific education, the nations of the world in the course of history can be identified as preoperational (which is the stage of children from their second to their sixth or seventh year of life), concrete operational (which is the stage from the seventh to the twelfth year) and formal operational (which is the stage of cognition from twelve years onward).

Adults living in a scientific culture are more rational (and intelligent) than adults living in pre-modern cultures. For example, according to studies conducted in the 1960s and 1970s, even educated adults living in Papua New Guinea did not reach the formal stage. Australian Aborigines who were still living a traditional lifestyle barely developed beyond a preoperational stage in their adult years. Without a population that has mentally developed to the level of formal operations, which entails a capacity to think about abstract relationships and symbols without concrete forms, to grasp syllogistic reasoning, to comprehend algebra, and to formulate hypotheses, there can be no modernization.

However, despite all the studies confirming Piaget’s powerful theory, from about 1975 to 1980 a “wave of ideological attacks” was launched across the Western academic world against any notion that the peoples of the Earth could be ranked in terms of their cognitive development. According to Oesterdiekhoff, “nearly all child psychologists of the first two generations of developmental psychology knew about the similarities between children and pre-modern man,” but “due to anti-colonialism, student revolt, and damaged self-esteem of the West in consequence of the World Wars this theory as the mainstream spirit of Western sciences and public opinion declined gradually” (2014a, 281). As another author observed in 1989, “any suggestion that the cognitive processes of the older child might possess any similarities to the cognitive processes of some primitive human cultures is regarded as being beneath contempt” (Donald LePan, 1989).

I came across Oesterdiekhoff’s research after a long search through Piagetian theory. I was wondering what his stage theory might have to say about the cognitive development of peoples in history. But I could find only sources on Piaget as a cognitive psychologist of children as such, not as a grand theorist of the cognitive development of humanity across world history, until I came to Oesterdiekhoff’s many publications, which draw on pre-1975 Piagetian research and current research. This research, as Oesterdiekhoff notes, “no longer belong to the center of attention and research interests. Most social scientists have never heard about these researchers and have only a very scanty knowledge of them” (2014a, 280).

Oesterdiekhoff is very blunt and ambitious in his arguments. His claim is that Piagetian theory is “capable of explaining, better than previous approaches, the history of humankind from prehistory through ancient to modern societies, the history of economy, society, culture, religion, philosophy, sciences, morals, and everyday life” (2014a). He believes that the rise of formal operational thinking among Europeans was the decisive factor in the rise of modern science, enlightenment, industrialism, democracy, and humanism in the West. The reason why India, China, Japan, and the Middle East did not start the Industrial Revolution “lies in their inability to evolve the stage of formal operations” (2014a).

Primitive and pre-modern peoples cannot be described as having the same rational disposition as modern peoples because they are at the preoperational and concrete operational stages of cognition. Primitive adults share basic aspects of the preoperational thinking of children no more mature than eight years old. Adults in pre-modern civilizations share the concrete operational thinking of 6-12 year olds.

Children and premodern adults share the same mechanisms and basic understandings of physical dimensions such as length, volume, time, space, weight, area, and geometric qualities. Both groups share the animistic understanding of nature and regard stones, mountains, woods, stars, rivers, winds, clouds, and storms as living beings, their movements and appearances as expressions of their will, intentions, and commitment. Premodern humans often manifest the animistic tendencies of modern children before their sixth year. Fetishism and natural religion of premodern humans reside in children’s mentality before concrete operational stage . . . The biggest parts of ancient religions are based on children’s psychology and animism before the sixth year of life (2016, 301).

It is not that adults in primitive and pre-modern cultures are similar to children in modern cultures in their emotional development, experience, and ability to survive in a hostile environment. It is that the reasoning abilities of adults in pre-modern cultures are undeveloped. As Lucien Lévy-Bruhl (1857-1939) had already observed in Primitive Mentality (1923), a work recently reissued (2018) by Forgotten Books, the primitive mind is devoid of abstract concepts, analytical reasoning, and logical consistency. The objective-visible world is not distinguished from the subjective-invisible world. Dreams, divination, incantations, sacrifices, and omens, not inferential reasoning and objective causal relations, are the phantasmagorical doors through which primitives get access to the intentions and plans of the unseen spirits that they believe control all natural events.

The visible world and the unseen world are but one, and the events occurring in the visible world depend at all times upon forces which are not seen . . . A man succumbs to some organic disease, or to snake-bite; he is crushed to death by the fall of a tree, or devoured by a tiger or crocodile: to the primitive mind, his death is due neither to disease nor to snake-venom; it is not the tree or the wild beast or reptile that has killed him. If he has perished, it is undoubtedly because a wizard had “doomed” and “delivered him over”. Both tree and animal are but instruments, and in default of the one, the other would have carried out the sentence. They were, as one might say, interchangeable, at the will of the unseen power employing them (2018, 438).

I have reservations about the extent to which the rise of operational thinking on its own can explain the uniqueness of Western history (as I will explain in Part II), but I agree that without children or adolescents reaching the stage of operational thinking, there can be no modernization. The geographical, economic, or cultural factors that led to the rise of science and the Industrial Revolution are not the matters we should be focusing on. The rise of a “new man” with psychogenetic capacities (psychological processes, personality, and behavior) for formal operational reasoning needs direct attention if we want to understand the rise of modern culture.

Cultural Relativism

But first, it seems odd that Oesterdiekhoff holds two seemingly diametrical outlooks, “cultural relativism and universality of rationality,” responsible for the discrediting of Piagetian cross-cultural theory. He does not explain what he means by “universality of rationality.” We get a sense that by “cultural relativism” he means the rejection of the unreserved confidence in the superiority of Western scientific rationality. Social scientists after the Second World War did become increasingly ambivalent about setting up Western formal thinking as a benchmark to judge the cognitive processes and values of other cultures, even though the non-Western world was happily embracing the benefits of Western science and technology.

The pathological extent to which this relativism has affected Western thinking can be witnessed right inside the otherwise hyper-scientific field of cognitive psychology today. Take the very well known textbook, Cognitive Psychology (2016), by IBM Professor of Psychology at Yale University, Robert Sternberg; it approaches every subject in a totally scientific and neutral manner, except the moment it touches the subject of intelligence cross-culturally, when it immediately embraces a relativist outlook informing students that intelligence is “inextricably linked to culture” and that it is impossible to determine whether members of “the Kpelle tribe in Africa” have less intelligent concepts than a PhD cognitive psychologist in the West. Intelligence is “something that a culture creates to define the nature of adaptive performance in that culture and to account for why some people perform better than others on the tasks that the culture happens to value.” It is “so difficult,” it says, to “come up with a test that everyone would consider culture-fair,” that is, “equally appropriate and fair for members of all cultures” (503-04).

If members of different cultures have different ideas of what it means to be intelligent, then the very behaviors that may be considered intelligent in one culture may be considered unintelligent in another (504).

This textbook pays detailed attention to the scientific achievements of Piaget, but portrays him as someone who investigated the “internal maturation processes” of children as such, without considering his cross-cultural findings, which clearly suggest that children in less developed and less scientific environments do not mature to the formal stage. Pretending that such findings do not exist, the book goes on to criticize Piaget for ignoring “evidence of environmental [cultural] influences on children’s performance.”

I am not suggesting that cultural relativism has taken over Western sciences in the way it has the humanities, sociology, history, and philosophy. But there is no denying this relativism is being effectively used by scientists against any overt presumption by Western scientists that their knowledge is “superior” to the knowledge of African tribes and Indigenous peoples. No cognitive psychologist is allowed to talk about the possible similarities between the minds of children and the minds of adult men in pre-modern cultures.

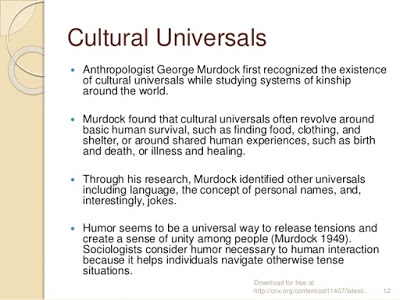

Cultural Universals

Oesterdiekhoff does not define “universality of rationality,” but we can gather from the literature he uses that he is referring to other anthropologists who argue that all humans are rationally inclined; primitive and pre-modern peoples are not “illogical” or “irrational.” The “actual structures of thought, cognitive processes, are the same in all cultures.” What differs are the “superstructural” values, religious beliefs, and ways of classifying things in nature. Primitive peoples, Islamic and Confucian peoples, were quite rational in the way they went about surviving in the natural world, making tools, building cultures, and enforcing customs that were “adaptive” to their social settings and environments. They did not develop science because they had different priorities and beliefs, and were less obsessed with mastering nature and increasing production.

The anthropologist Claude Lévi-Strauss and the sociologist Émile Durkheim were the first to argue that the primitive mind is “logical in the same sense and same fashion as ours” and that the only difference lies in the classification systems and thought content. George Murdock and Donald Brown, in more recent times, came up with the term “cultural universals” (or “human universals”) to refer to patterns, institutions, customs, and beliefs that occur universally in all cultures. These universals demonstrated, according to these anthropologists, that cultures differ a lot less than one might think by just examining levels of technological development. Murdock and Brown pointed to strong similarities in the gender roles of all cultures, the common presence of the incest taboo, similarities in their religious and healing rituals, mythologies, marriage rules, use of language, art, dance, and music.

This idea about the universality of rationality and “cultural universals” was subsequently elaborated in a more Darwinian direction by evolutionary psychologists. Evolutionary psychology is generally associated with “Right wing” thinking, in contrast to cultural relativism, which is associated with “Left wing” thinking. Evolutionary psychologists like E. O. Wilson and Steven Pinker hold that these cultural universals are naturally selected, biologically inherited behaviors. They believe that rationality is a naturally inherited disposition among all humans, though they don’t say that the levels of knowledge across cultures are the same. Humans are rational in the way they go about surviving and co-existing with other humans. These universals were selected because they enhanced the adaptability of peoples to their environments and improved the group’s chances of survival. Some additional cultural universals observed in all human cultures are bodily adornment, calendars, cooperative labor, cosmology, courtship, divination, division of labor, dream interpretation, food taboos, funeral rites, gift-giving, greetings, hospitality, inheritance rules, kin groups, magic, penal sanctions, puberty customs, residence rules, soul concepts, and status differentiation.

Evolutionary psychologists are convinced that the existence of cultural universals amounts to a refutation of the currently “fashionable” notion that all human behaviors, including gender differences, are culturally determined. But if the West is very similar to other cultures, why did modern science, along with liberal democratic values, develop in this civilization? Evolutionary psychologists search for general explanations. The notion of cultural universals meets this criterion; Western uniqueness does not. Therefore, they either ignore this question or reduce Western uniqueness to a concatenation of historical factors, varying selective pressures, and geographical good luck. They point to how modern science has been assimilated by multiple cultures, from which point they argue that science is not culturally exceptional to the West but a universal method that produces universal truths “for humanity.”

Can one argue that universalism is a cultural attribute uniquely Western and therefore relative to this culture?

Piagetian Universalism and IQ Convergence

Piagetian theory is also universalist in maintaining that all cultures are now reaching the stage of formal operational thinking. The West merely initiated formal reasoning. More than this, according to Oesterdiekhoff, this cognitive convergence is happening across all the realms of social life, because changes in the cognitive structures of humans bring simultaneous changes in the way we think about politics and institutional arrangements. The more rational we become, the more we postulate enlightened conceptualizations of government in opposition to authoritarian forms. Drawing on the extension of Piagetian theory to explain the moral development of humans (initiated by Piaget and elaborated by Lawrence Kohlberg), Oesterdiekhoff writes that once humans reach stage four, they start to grasp “that rule legitimacy should follow only from a correct rule installation, that is, from the choices of the players involved” (2015, 88).

Thus, they regard only rules correctly chosen as obliging rules. Only democratic choices install legitimate rules. Youth on the formal stage surmount therefore the holy understanding of rules by the democratic understanding. They replace an authoritarian understanding of rules, laws, and customs by a democratic one. Thus, they invent democracy in consequence of their cognitive maturation (2015, 88-9).

The emergence of the adolescent stage of formal operations gave birth not only to the new sciences after 1650 but also to philosophers such as Locke, Montesquieu, and Rousseau, who formulated the basic principles of constitutional government, representative institutions, and religious tolerance. Extensive cross-cultural research has shown that “children do not understand tolerance for deviating ideas, liberty rights for individuals, rights of individuals against government and authority, and democratic legitimacy of governments and authorities” (2015, 93). They are much like the adults of premodern societies, or current backward Islamic peoples, who take “laws and customs as unchangeable, eternal, and divine, made by god and not modifiable by human wishes or choices” (2015, 90).

This argument may seem similar to Francis Fukuyama’s thesis that modernizing humans across the world are agreeing that liberal-democratic values best satisfy the longing humans have for a state that recognizes the right of humans to pursue their own happiness within a constitutional state based on equal rules. The difference, a crucial one, is that for Fukuyama the rise of democracy came from the articulation and propagation of new ideas, whereas for Oesterdiekhoff psychogenetic maturation is a precondition of democratic rule. Adults who were raised in a pre-modern culture and have a concrete operational mind can “never surmount” this stage, no matter how many books they read about the merits of liberal democracy. These adults will lack the appropriate ontogenetic development required for a democratic mind.

The absence of stimuli and forces of modern culture during early childhood in premodern cultures prevents later psychological development from going beyond certain stages . . . Unused developmental opportunities in youth stop the development of the nervous system, thus preventing psychological advantages in later life. This explains why education and enlightenment, persuasion and media programs could not draw adult premodern people out of their adherence to magic, animism, ordeal praxis, ancestor worship, totemism, shamanism, and belief in witches. Such people, moving in adulthood to modern milieus, cannot surmount their anthropological structures and their deepest emotions and convictions (2016, 306-7).

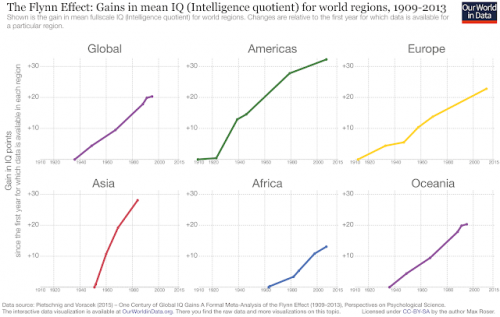

Moreover, according to Oesterdiekhoff, with the attainment of higher Piagetian stages come higher IQ levels. Psychogenetic differences, not biological genetic differences, are the decisive factor. “All pre-modern peoples stood on intelligence levels of 50 to 70 [IQ points] or on preoperational or concrete operational levels, no matter from what race, culture or continent they have come” (2014b, 380).

Not only the Western nations, but all modernizing nations have raised their scores. The rises in stage progression and IQ scores express the greatest intelligence transformations ever in the history of humankind and stem solely from changes in culture and education. When Africans, Japanese, Chinese and Brazilians have raised their intelligence so dramatically, where is the evidence for huge genetic influences? Huge genetic influences might be assumed if Europeans had always had higher intelligence and if African, Indians, Arabs and Vietnamese had been unable to raise their intelligence to levels superior to that of Europeans 100 years ago. But Latin Americans and Arabs today do have higher IQ scores than Europeans had 100 years ago . . . Where is the leeway for genetic influences to affect national intelligence differences? (Ibid).

IQ experts would counter that only psychometric data about levels of heritable general intelligence can explain the rise of formal operational thinking. But even if we agree that a gap in IQ scores between American blacks and American whites has remained despite the Flynn effect and similar levels of education and income, it is very hard to attribute the remarkable increases in IQ identified over the last century to heredity. Oesterdiekhoff’s argument that “all modernizing nations have raised their IQ scores,” and that operational thinking has been central to this modernization, is a strong one.

Formal Reasoning is not a Cultural Universal

The stage of formal operations cannot be said to be a biologically primary ability that humans inherit genetically. It is a biologically secondary ability requiring a particular psycho-cultural context. Formal thinking came to be assimilated by other nations (most successfully in east Asian nations with an average high IQ, but far less so in sub-Saharan nations where to this day witchcraft prevails). The abilities associated with the first two stages (e.g., control over motor actions, walking, mental representation of external stimuli, verbal communication, ability to manipulate concepts) have been acquired universally by all humans since prehistoric times. These are biologically primary qualities that children across cultures accomplish at the ages and in the sequence more or less predicted by Piaget. They can be said to be universal abilities built into human nature and ready to unfold with only little educational socialization, explainable in the context of Darwinian evolutionary psychology. These cognitive abilities can thus be identified as “cultural universals.”

The concrete-operational abilities of stage three (e.g., the “ability to conserve” or to know that the same quantity of a liquid remains when the liquid is poured into a differently shaped container) are either lacking in primitive cultures or emerge at later ages in children than they do in modern cultures. These cognitive abilities may also be described as biologically primary, as skills that unfold naturally as the child matures in interaction with adult members of the society. In modern societies, all individuals with a primary education acquire concrete operational abilities. The aptitudes of this stage can be reasonably identified as universally present in all agrarian cultures.

This is not the case at all with formal operational skills. The skills associated with this stage (inductive logic, hypothesis testing, reasoning about proportions, combinations, probabilities, and correlations) do not come to humans naturally through socialization. There is abundant evidence that even normally intelligent college students with a long background in education have great difficulties distinguishing between the form and content of a syllogism, as well as with other types of formal operational skills. Oesterdiekhoff acknowledges that:

Only when human beings are exposed to forces and stimuli typical of modern socialization and culture do they progress further and develop the adolescent stage of formal operations (2016, 307).

But, again, as has been observed by critics of Piaget, even in modern societies where children inhabit a rationalized environment and adolescents are taught algebra and a variety of formal operational skills, many students with a reasonable IQ find it difficult to think in this way. According to P. Dasen (1994), only one-third of adults ever reach the formal operational stage. Evolutionary psychologists have thus disagreed with the idea that this stage is bound to unfold among most humans as they get older as long as they get a reasonably modern education. There are many “sub-stages” within this stage, and the upper stages require a lot of schooling and students with a keen interest and intelligence in this type of reasoning. This lack of universality in learning formal operational skills has persuaded evolutionary psychologists to make a distinction between the biologically primary abilities of the first three stages and the biologically secondary abilities of stage four. Formal reasoning is principally a “cultural invention” requiring “tedious repetition and external motivation” for students to master it.

If the ability to engage in formal thinking is so particular, a biologically secondary skill in our modern times, would it not require a very particular explanation to account for the origins of this cognitive stage in an ancient world devoid of a modern education? If the rise of “new humans” with a capacity for formal thinking was responsible for the rise of the modern world, and the existence of a modern education is an indispensable requirement in the attainment of this stage among a limited number of students, how did “new humans” grow out of a pre-modern world with a lower average IQ?

In the second part of this article, it will be argued that Europeans reached stage four long before any other people on the planet because Europeans began an unparalleled intellectual tradition of first-person investigations into their conscious states. This is a type of self-reflection in which European man began to ask who he is, how he knows that he is making truthful statements, what the best life is, and whether he is being self-deceived in his beliefs and intentions. This is a form of self-knowledge that was announced in the Delphic motto “know thyself.” It would be an error, however, to describe the beginnings of this self-consciousness as a relation to something in oneself (an I or an ego) from which a predicate, or an outside, to which the subject relates, is derived. The first-person consciousness of Europeans did not emerge outside the being-in-the-world of the aristocratic community of Indo-Europeans. Europeans began a quest for rationally justified truths, for objective standards of justification, and for the realization of the good life in a reflective self-relation, coupled with socially justified reasons about what is morally appropriate.

References

Brown, Donald (1991). Human Universals. Philadelphia: Temple University Press.

Dasen, P. (1994). “Culture and cognitive development from a Piagetian perspective.” In W. J. Lonner & R. S. Malpass (Eds.), Psychology and culture. Boston: Allyn and Bacon.

Genovese, Jeremy (2003). “Piaget, Pedagogy, and Evolutionary Psychology.” Evolutionary Psychology, Volume 1: 127-137.

LePan, Donald. (1989). The Cognitive Revolution in Western Culture. London: Macmillan Press.

Lévy-Bruhl, Lucien (2018). Primitive Mentality [1923]. Forgotten Books.

Oesterdiekhoff, Georg W. (2012). “Was pre-modern man a child? The quintessence of the psychometric and developmental approaches.” Intelligence 40: 470-478.

Oesterdiekhoff, Georg W. (2014a). “The rise of modern, industrial society. The cognitive developmental approach as key to disclose the most fascinating riddle in history.” The Mankind Quarterly, 54, 3/4, 262-312.

Oesterdiekhoff, Georg W. (2014b). “Can Childlike Humans Build and Maintain a Modern Industrial Society?” The Mankind Quarterly 54, 3/4, 371-385.

Oesterdiekhoff, Georg W. (2015). “Evolution of Democracy. Psychological Stages and Political Developments in World History.” Cultura: International Journal of Philosophy of Culture and Axiology 12 (2): 81-102.

Oesterdiekhoff, Georg W. (2016). “Child and Ancient Man: How to Define Their Commonalities and Differences.” The American Journal of Psychology, Vol. 129, No. 3, pp. 295-312.

Sternberg, Robert (2003). Cognitive Psychology. Nelson Thompson Learning. Third Edition.

This article was reproduced from the Council of European Canadians website.