Les trois menaces mortelles contre la civilisation européenne

par Guillaume Faye

Ex: http://www.gfaye.com

La première menace est démographique et migratoire et elle a l’Afrique comme visage principal et notre dénatalité comme toile de fond. La seconde menace est l’islam, comme au VIIe siècle mais pis encore. La troisième menace, cause des deux premières, provient de l’oligarchie polico-médiatique qui infecte l’esprit public et paralyse toute résistance.

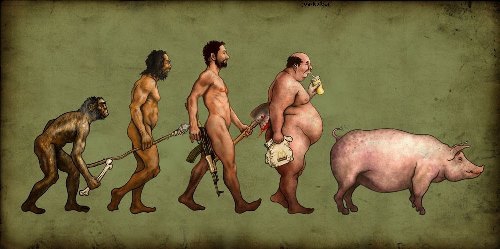

La démographie est la seule science humaine exacte. La vigueur démographique d’un peuple conditionne ses capacités de prospérité et d’immigration hors de son territoire. La faiblesse démographique d’un peuple provoque une immigration de peuplement chez lui, ainsi que son déclin global de puissance, de rayonnement et de prospérité. Et à terme, sa disparition.

Le risque majeur qui pèse sur l’Europe ne provient pas d’une soumission aux Etats-Unis, dont on peut toujours se libérer, mais de la conjonction de deux facteurs : une pression migratoire gigantesque en provenance majoritaire d’Afrique (Afrique du Nord et Afrique noire) corrélée à une dénatalité et à un vieillissement démographique considérables de l’Europe. Et, avec en prime, l’islamisation des sociétés européennes, conduite à marche forcée.

La bombe démographique africaine

L’Afrique dans son ensemble comptait 200 millions d’habitants en 1950 ; le milliard a été dépassé en 2010 et l’on va vers 2 milliards en 2050 et 4 milliards en 2100. Il y a quatre siècles, l’Afrique représentait 17% de la population mondiale et seulement 7% en 1900, à cause de l’expansion démographique de l’Europe et de l’Asie et de sa stagnation. Puis, à cause de la colonisation (de ”civilisation ” et non pas de peuplement) par les Européens, l’Afrique a connu une irrésistible poussée démographique, du fait de la baisse considérable de sa mortalité infantile et juvénile provoquée par l’hygiène, la médecine et l’amélioration alimentaire dues à la colonisation. Ce point est soigneusement caché par l’idéologie dominante repentante qui fustige le ”colonialisme”. C’est cet affreux colonialisme qui a donc permis à l’Afrique toute entière de décoller démographiquement – ce qui provoque la menace migratoire actuelle. Et, après les indépendances (années 60), l’Afrique a continué à bénéficier massivement d’assistances médicales et alimentaires de la part des pays occidentaux. Ce qui a permis la continuation de son boom démographique.

En 2014, l’Afrique représentait 16% de la population mondiale (1,138 milliards d’habitants sur 7,243 milliards) en augmentation constante. L’indice de fécondité, le nombre moyen d’enfants nés par femme, y est de 4,7, le taux le plus fort au monde. La moyenne mondiale est de 2,5. En Europe, il est inférieur à 1,5, le seuil de simple renouvellement des générations étant de 2,1. Le continent africain contient non seulement la population la plus prolifique, mais la plus jeune de la planète : 41% ont moins de 15 ans et l’âge médian est de 20 ans. Donc la natalité est exponentielle, en progression géométrique ; l’Ouganda et le Niger sont les pays les plus jeunes du monde : 49% de moins de 15 ans.

Mais l’espérance de vie est aussi la plus faible au monde : 57 ans contre 69 ans de moyenne mondiale. Cela n’obère pas la reproduction et garantit l’absence de vieillards à charge. En 1960, deux villes d’Afrique seulement dépassaient le million d’habitants, 25 en 2004, 57 aujourd’hui ! C’est dire l’ampleur choc démographique. L’Afrique est donc une bombe démographique, plus exactement un énorme réservoir percé qui commence à se déverser sur l’Europe. Sans que cette dernière ne fasse rien.

Dépopulation, vieillissement et invasion migratoire de l’Europe

En Europe, la situation est exactement l’inverse : dénatalité et vieillissement. En incluant la Russie mais pas la Turquie, l’Europe comptait 742 millions d’habitants en 2013, dont 505,7 millions dans l’Union européenne – immigrés extra-européens compris. La très faible croissance démographique de l’Europe n’est due qu’à l’immigration et à la natalité supérieure des immigrés, mais le nombre d’Européens de souche ne cesse de diminuer. L’Europe représente 10,3% de la population mondiale, contre 25% en 1900, date de l’apogée absolue de l’Europe dans tous les domaines sur le reste du monde. Cette suprématie fut cassée par les deux guerres mondiales. En 1960, l’Europe représentait encore 20% de la population mondiale, mais la chute de la natalité débuta dans les années 70, en même temps que le démarrage des flux migratoires en provenance d’Afrique et d’Orient. La table était mise.

La moyenne d’âge est aujourd’hui de 38 ans en Europe et sera – si rien ne change– de 52, 3 ans en 2050 ( étude de la Brookings Institution). Le taux de fécondité s’est effondré en dessous du seuil de renouvellement des générations (2,1). En France, il est de 2, le plus fort d’Europe, mais uniquement grâce à la natalité immigrée, notre pays étant celui qui héberge et reçoit le plus d’allogènes. En Grande-Bretagne, le taux de fécondité est de 1,94, second au classement, tout simplement parce que ce pays ”bénéficie” de la natalité immigrée, juste derrière la France.

En Allemagne, le taux de fécondité s’est affaissé à 1,38 enfants par femme ; les projections indiquent que l’Allemagne, pays le plus peuplé d’Europe (81,8 millions d’habitants), en vieillissement continu, ne comptera plus en 2050 que 75 millions d’habitants, dont une proportion croissante d’extra-Européens. L’Italie connaît une situation préoccupante : c’est là où l’infécondité et le vieillissement sont les plus forts. C’est en Ligurie (Nord-Ouest) que le rapport population âgée/population jeune est le plus fort au monde, et Gênes est la ville qui se dépeuple le plus parmi les métropoles européennes : la mortalité – par vieillesse –y est de 13,7 pour 1000, contre une natalité de 7,7 pour 1000.

Parlons de la Russie. La Fédération a connu son pic de population à 148, 689 millions d’habitants en 1990 et a baissé à 143 millions en 2005, la Banque mondiale estimant qu’en 2050, le pays ne compterait plus que 111 millions d’habitants (– 22%). Situation catastrophique. L’explication : un indice de fécondité très faible et une surmortalité chez les hommes de la population active. Mais, surprise : en 2012, la Russie a connu un accroissement net de sa population pour la première fois depuis 1992, et pas du tout à cause de l’immigration. Grâce à qui ? À M. Poutine et à sa politique nataliste.

Risque de déclassement et de paupérisation économiques

Mais, s’il se poursuit, ce déclin démographique de l’Europe sera synonyme de déclassement économique, de paupérisation, de perte d’influence et de puissance. En 2005, la population active européenne représentait 11,9% de la population active mondiale. Si rien ne change démographiquement, elle ne sera plus que de 6,4% en en 2050. C’est le recul et le déficit de dynamisme économiques assurés. Le rapport actifs/ retraités, qui approche les 1/1 aujourd’hui ne sera plus, selon le FMI, que de O,54/1 en 2050, soit deux retraités pour un actif. Équation insoluble.

Selon l’OCDE, 39% seulement des Européens de 55-65 ans travaillent, encore moins en France. Le nombre et la proportion des Européens qui produisent ne cesse de baisser, par rapport au reste du monde. D’après le démographe et économiste William H. Frey, la production économique de l’Europe devrait radicalement diminuer dans les 40 ans à venir. En 2010, la tranche d’âge des 55–64 ans dépassait déjà celle des 15–24 ans. Selon un rapport du Comité de politique économique de l’Union européenne, la population active de l’UE diminuera de 48 millions de personnes (–16%) et la population âgée inactive et à charge augmentera de 58 millions (+77%) d’ici 2050. Explosif.

En 2030, la population active de l’UE sera de 14% inférieure au niveau de 2002. Il est trop tard pour corriger, même en cas de reprise démographique miraculeuse dans les prochaines années. Les économistes crétins disent que cela va au moins faire refluer le chômage : non, cela va augmenter le nombre de pauvres, du fait de la diminution de l’activité productrice (PIB). En effet, en 2030, la capacité de consommation des Européens (revenu disponible) sera de 7% inférieure à celle d’aujourd’hui, à cause du vieillissement. Pour répondre à ce défi économique, les institutions européennes et internationales, comme les milieux politiques européens, en appellent à l’immigration. Nous verrons plus bas que cette solution est un remède pire que le mal.

Extension du domaine de l’islam

En 2007, le Zentralinstitut Islam Archiv Deutschland estimait à 16 millions le nombre de musulmans dans l’UE (7% de la population) donc 5,5 millions en France, 3,5 millions en Allemagne, 1,5 en Grande Bretagne et un million en Italie comme aux Pays-Bas. Du fait des flux migratoires incontrôlés et en accélération depuis cette date, composés en grande majorité de musulmans qui, en outre, ont une natalité bien supérieure à celle des Européens, ces chiffres doivent être multipliés au moins par deux ; d’autant plus que le nombre de musulmans est systématiquement sous-estimé par les autorités qui truquent les statistiques pour ne pas donner raison aux partis ”populistes” honnis. Le nombre de musulmans dans l’Union européenne dépasse très probablement les 30 millions – environ 15% de la population– et l’islam est la seconde religion après le christianisme. Le premier progresse très rapidement, le second décline.

De plus, les musulmans, en accroissement constant, ont une structure démographique nettement plus jeune et prolifique. Et il ne s’agit pas d’un islam tiède, ”sociologique”, mais de plus en plus radical, conquérant, offensif. Le risque d’attentats djihadistes, en hausse continue et qui vont évidemment se multiplier, sans que cela n’incite le moins du monde les gouvernements européens décérébrés à stopper les flux migratoires invasifs, n’est pas pourtant le plus grave. Le plus grave, c’est l’islamisation à grande vitesse des pays européens, la France en premier lieu, ce qui constitue une modification inouïe du soubassement ethno-culturel de l’Europe, surgie avec une rapidité prodigieuse en à peine deux générations et qui continue irrésistiblement dans l’indifférence des oligarchies.

Ce bouleversement est beaucoup plus inquiétant que la foudroyante conquête arabo-musulmane des VIIe et VIIIe siècle, du Moyen-Orient, de l’Afrique du Nord et d’une partie de l’Europe méditerranéenne, essentiellement militaire. Car, à l’époque, les Européens avaient de la vigueur et une capacité démographique, qui a permis de limiter puis de repousser l’invasion. Aujourd’hui, il s’agit de la pire des invasions : apparemment pacifique, par le bas, reposant sur le déversement démographique migratoire.

Mais elle n’est pas si pacifique que cela. Bien que les masses de migrants clandestins, jamais contrés ni expulsés, aient d’abord des motivations économiques ou le désir de fuir l’enfer de leur propre pays (pour l’importer chez nous), ils sont instrumentalisés par des djihadistes qui n’ont qu’un seul objectif : la conquête de l’Europe et sa colonisation par l’islam, réponse cinglante au colonialisme européen des XIXe et XXe siècle.

Les plus lucides sont, comme bien souvent, les Arabes eux-mêmes. Mashala S. Agoub Saïd, ministre du Pétrole du gouvernement non reconnu de Tripoli (Libye) déclarait au Figaro (02/06/2015), à propos des foules de migrants clandestins qui traversent la Méditerranée : « le trafic est entretenu par les islamistes qui font venir les migrants de toute l’Afrique et du Moyen Orient.[…] Daech enrôle les jeunes, leur enseigne le maniement des armes, en échange de quoi l’État islamique promet à leur famille de faciliter leur passage de la Méditerranée pour entrer en Europe. » Il y a donc bel et bien une volonté d’invasion de l’Europe, parfaitement corrélée au djihad mené en Syrie et en Irak, et au recrutement de musulmans d’Europe. Le but est de porter la guerre ici même. En s’appuyant sur des masses de manœuvre toujours plus nombreuses installées en UE.

La possibilité du djihad en Europe

Il faut s’attendre, si rien ne change, à ce que, au cours de ce siècle, une partie de l’Europe occidentale ressemble à ce qu’est le Moyen Orient aujourd’hui : le chaos, une mosaïque ethnique instable et ingérable, le ”domaine de la guerre” (Dar-al-Suhr) voulu par l’islam, sur fond de disparition (de fonte, comme un glacier) de la civilisation européenne ; et bien entendu, de paupérisation économique généralisée.

Un signe avant-coureur de la future et possible soumission des Européens à l’islam et de leur déculturation (infiniment plus grave que l’”américanisation culturelle”) est le nombre croissants de convertis. Exactement comme dans les Balkans du temps de la domination des Ottomans : la conversion à l’islam relève du ”syndrome de Stockholm”, d’un désir de soumission et de protection. Selon l’Ined et l’Insee (chiffres, comme toujours, sous-estimés) il y aurait déjà en France entre 110.000 et 150.000 converti(e)s au rythme de 4.000 par an.

Le converti fait allégeance à ses nouveaux maîtres et, pour prouver son ardeur de néophyte, se montre le plus fanatique. Presque 20% des recrutés pour l’équipée barbare de l’État islamique (Daech) sont, en Europe, des convertis. Ils sont issus des classes moyennes d’ancienne culture chrétienne– jamais juive. On remarque exactement le même symptôme – de nature schizophrène et masochiste– que dans les années 60 et 70 où les gauchistes trotskystes ou staliniens provenaient de la petite bourgeoisie. Il faut ajouter que les jeunes filles autochtones qui se convertissent à l’islam, dans les banlieues, le font par peur, pour ne plus être harcelées. Summum de l’aliénation.

Un sondage de l’institut britannique ICM Research de juillet 2014 fait froid dans le dos. Il révèle que l’État islamique (Daech), dont la barbarie atteint des sommets, serait soutenu par 15% des Français (habitants de la France, pour être plus précis) et 27% chez les 18-24 ans ! Qu’enseignent ces chiffres ? D’abord qu’une majorité des musulmans présents en France ne sont pas du tout des ”modérés” mais approuvent le djihad violent. Ensuite que 27% des ”jeunes” approuvent Daech ; ce qui donne une idée de l’énorme proportion démographique des jeunes immigrés musulmans en France dans les classes d’âge récentes, peut-être supérieure déjà à 30%. Enfin, comme le note Ivan Rioufol (Le Figaro, 05/06/2015) à propos de ce « stupéfiant sondage », il est possible que l’ « islamo-gauchisme » de jeunes Européens de souche, convertis ou pas, expliquent ces proportions, mais, à mon avis, pas entièrement.

Bien entendu, pour casser le thermomètre, l’oligarchie politico-médiatique a enterré ce sondage, photographie très ennuyeuse de la réalité, ou répète qu’il est bidon. On se rassure et l’on ment – et l’on se ment – comme on peut. Nous sommes assis sur un tonneau de poudre. L’”assimilation” et l’”intégration” ne sont plus que des contes de fées. L’incendie est aux portes.

Seule solution : la forteresse Europe.

La troisième menace qui plombe les Européens vient d’eux mêmes, de leur anémie, plus exactement celle de leurs dirigeants et intellocrates qui, fait inouï dans l’histoire, organisent ou laissent faire depuis des décennies, l’arrivée massive de populations étrangères (n’ayant plus rien à voir avec de la ”main d’œuvre”) souvent mieux traitées par l’État que les natifs. L’idéologie de l’amour inconditionnel de l’ ”Autre”, préféré au ”proche”, cette xénophilie, gouverne ce comportement suicidaire et provient d’une version dévoyée de la charité chrétienne.

La mauvaise conscience, la repentance, la haine de soi (ethnomasochisme) se conjuguent avec des sophismes idéologiques dont les concepts matraqués sont, en novlangue : ouverture, diversité, chance-pour-la-France. vivre–ensemble, etc. Bien que le peuple de souche n’y croie pas, l’artillerie lourde de l’idéologie dominante paralyse tout le monde. D’autant plus que, comme le démontre un dossier de Valeurs Actuelles (04–10/06/2015), la liberté d’expression sur les sujets de l’immigration et de l’islam est de plus en plus réprimée. Exprimer son opinion devient risqué, donc on se tait, le courage n’étant pas une vertu très partagée. Partout, les musulmans et autres minorités – qui demain n’en seront plus – obtiennent des privilèges et des exemptions illégales ; partout ils intimident ou menacent et l’État recule.

Donc, pour l’instant, la solution de l’arrêt définitif des flux migratoire, celle du reflux migratoire, de l’expulsion des clandestins, de la contention et de la restriction de l’islamisation n’est pas envisagée par les dirigeants, bien que souhaitée par les populations autochtones. Ce qui en dit long sur notre ”démocratie”. Mais l’histoire est parfois imprévisible…

Compenser le déclin des populations actives européennes par une immigration accrue (solution de l’ONU et de l’UE) est une aberration économique. Le Japon et la Chine l’ont compris. La raison majeure est que les populations immigrées ont un niveau professionnel très bas. La majorité vient pour être assistée, pour profiter, pour vivre au crochet des Européens, pour s’insérer dans une économie parallèle, bas de gamme voire délinquante. Il n’y a aucun gisement économique de valeur chez les migrants, qui coûtent plus qu’ils ne produisent et rapportent, sans parler du poids énorme de la criminalité, à la fois financier et sociologique. Les exceptions confirment la règle.

Le choix de l’aide massive au développement pour l’Afrique, qui stopperait l’immigration (thèse de J-L. Borloo), est absurde et s’apparente à un néo-colonialisme qui n’a jamais fonctionné. Pour une raison très simple : on aura beau investir des milliards en Afrique et au Moyen-Orient, ça n’empêchera jamais les guerres endémiques, l’incurie globale de ces populations à se gouverner, leurs ploutocraties de voleurs et de tyrans à prospérer et leurs populations à rêver d’Europe et à fuir. C’est atavique. Et les rêves idiots, américains et européens, de conversion à la ”démocratie” de ces peuples s’écrasent contre le mur du réel.

La seule solution est donc la loi du chacun chez soi, ce qui supposerait un abandon (révolutionnaire) de l’idéologie des Droits de l’homme qui est devenue folle. Cela nécessiterait l’arrêt de la pompe aspirante des assistances et aides multiples. Tout migrant qui entre en Europe (soit en mode ”boat people”, soit par avion avec un visa) ne devrait bénéficier d’aucun droit, d’aucune aide, aucune subvention ; il serait immédiatement expulsé, s’il est illégal, comme cela se pratique dans 90% des pays du monde membres de l’ONU. Ces mesures sont beaucoup plus efficaces que la protection physique des frontières. Sans cette pompe aspirante de l’Eldorado européen, il n’y aurait aucun boat people en Méditerranée ni de faux touristes qui restent après expiration de leur visas ou de pseudo réfugiés qui demeurent après le rejet de leur demande d’asile.

Argument idiot de la vulgate du politiquement correct : mais l’Europe va s’enfermer dans les bunkers de frontières ! Oui. Mieux valent les frontières fermées que le chaos des frontières ouvertes. La prospérité, la puissance, l’identité, le rayonnement n’ont jamais dans l’histoire été produits par des nations et des peuples ouverts à tous les vents. De plus, politiciens et intellectuels assurent que la cohabitation ethnique se passe parfaitement bien, ce qui est vrai dans les beaux quartiers où ils résident (et encore…) et où les allogènes sont très peu nombreux, mais totalement faux dans le reste du pays. Le mensonge, le travestissement de la réalité ont toujours été la marque des majordomes des systèmes totalitaire : ”tout va bien, Madame la Marquise”.

Le Tribunal de l’Histoire ne fait pas de cadeau aux peuples qui démissionnent et surtout pas à ceux qui laissent une oligarchie suivre une politique radicalement contraire à leurs souhaits, méprisant la vraie démocratie. Le principe de responsabilité vaut pour les nations autant que pour les individus. On ne subit que ce à quoi l’on a consenti. Face à ces menaces, pour de simples raisons mathématiques et démographiques, il faut prendre conscience qu’il est encore temps mais pour peu de temps encore. Il faut se réveiller, se lever, se défendre. Après, ce sera plié. Le rideau tombera.

De islam is daar een extreme exponent van, zie Boko Haram, maar ook het ‘blanke’, Europese vooruitgangsdenken van de pionier-ontdekkingsreiziger is mannelijk-imperialistisch. Neen, we huwen geen kindbruidjes (meer) uit, maar de onderliggende macho-cultuur is ook de onze. Poetin, Assad, maar ook Juncker of Verhofstadt of Michel, kampioenen van de democratie, zijn in hetzelfde bedje ziek: voluntaristische ego’s die’ willen ‘scoren’ en van erectie tot erectie evolueren. Altijd is er een doel, een schietschijf, een trofee.

Et oui, les homosexuels ont droit au respect et non, les gay-prides n’inspirent pas le respect. Bref, le mieux est l’ennemi du bien, trop c’est trop, et quand on en a assez, quand on en est gavé, on en a la nausée et on éprouve l’effet fed up… qui entraine l’hostilité… qui finit par nourrir cette homophobie qu’on prétend combattre.

Idem à l’égard de l’islam. Dans les années 50 nous habitions au Congo où il y avait une communauté sénégalaise musulmane qui était fort respectée car c’étaient des personnes “sérieuses”. Pendant mes séjours au Pakistan dans les années 90 j’ai rencontré des musulmans aussi pieux que nos catholiques, normalement pieux et pas exhibitionnistes pour un sou. J’ai été séduite par les Pakistanais parce que c’étaient des personnes sérieuses, respectueuses, de confiance, à tel point que j’y ai voyagé seule en toute sérénité. Fatalement je me suis intéressée à l’islam et ai même entrepris d’apprendre l’arabe. Mais, quand on a commencé à ne plus parler que de foulards, burqas, mosquées, halal, ramadan, égorgements en place publique et prières de rues, piscines, lisez le rapport

This lie is manifestly not sustainable for French literature, where engagement in politics was as common on the right as on the left. The names of Maurice Barrès and Charles Maurras will certainly live for so long as there is a French nation. Both attained the highest levels of literary aesthetic, though neither is nor should be immune from criticism. Maurice Barrès’s desire to see his native Lorraine returned to France led him to impassioned public support of the bloodshed of the First World War that won him the terrible sobriquet of le rossignol des carnages (the nightingale of carnage), and was not an advert for nationalism, while Maurras wished to see an end to the Republic, but failed to provide decisive leadership at a crucial moment in February 1934, when the conjunction of political circumstances was favourable to his wishes, so demonstrating that he was not the man of destiny that his followers thought him.

While undoubtedly the Republican side in the Spanish Civil War attracted more writers and artists than did the cause of national Spain, there were some notable supporters of the Francoist cause. Unsurprisingly Ezra Pound was parti pris on the right side. Pound merits a whole talk of his own and I will not attempt the hopeless task of precising his life and work in the time available to me this afternoon. I will however mention Roy Campbell.

Henry Williamson was another author who was deeply politically engaged in the most controversial way, but whose reputation has been somewhat sanitised by excessive focus on his nature writing, especially his great success, Tarka the Otter, rather than his deeply political and semi-autobiographical cycle of novels A Chronicle of Ancient Sunlight. It tells the story of his South London boyhood, his experiences at the front in the First World War, and his progressive evolution into that apparent contradiction in terms, a pacifist and a fascist, and his complex relationship with Sir Oswald Mosley, an idealised version of whom appears as Sir Hereward Birkin, the leader of the Imperial Socialist Party, based on the British Union of Fascists.